OUR WORK

HAVE A LOOK AT OUR PROJECTS

Our mission is to apply innovative methods to uncover violations and to provide information that helps shape regulatory policies. We work towards a world where algorithms are accountable, adjustable and avoidable. In other words, we are the digital detectives who shine a light on hidden algorithmic injustices and work to bring accountability and transparency to the tech industry.

TOPICS OF INVESTIGATION

Our core expertise is data-driven algorithmic auditing. Our custom evidence collection infrastructure allows us to automate algorithmic audits at scale, including on mobile.

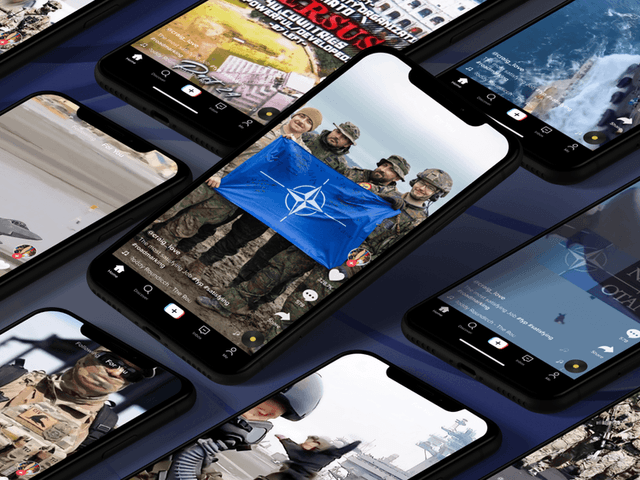

We maintain a strong focus on recommender systems, which remain the most influential curators of online content. Beyond that, we are also starting to scrutinize generative AI systems that are already deeply transforming how we access and produce information.

For both recommender systems and generative AI systems, we primarily focus on election integrity monitoring because of its immediate impact on democracy and fundamental rights. Moreover, electoral processes are central to the DSA and the EU AI Act, allowing us to disseminate our findings in support of our policy and advocacy efforts.

OUR MISSION

We hold influential and opaque algorithms accountable by exposing algorithmic harms, to increase power and agency for the many, not the few.

THE PROBLEM

OPAQUE ALGORITHMS LEADING TO LOSS OF AGENCY

A complex set of proprietary algorithmic systems governs and shapes many aspects of our public and private lives today. The lack of transparency and interpretability of these systems significantly compromises our agency and the protection of our rights.

PLATFORM ECONOMY FAVOURS CONCENTRATION OF RESOURCES AND POWER IN THE HANDS OF A FEW

Algorithmic systems don’t operate in a vacuum; they are born into the platform economy. The current dynamics of the platform economy favour economies of scale due to network effects. This in turn leads to a concentration of power (including access to computing, talent and data) in big platform companies. Platform companies’ anti-competitive conduct and their vertical integration of smaller companies and other products limit users’ ability to change provider, decreasing our collective bargaining power to call for a digital ecosystem that aligns with our interests.

BIASED ALGORITHMIC SYSTEMS EXACERBATE EXISTING INEQUALITIES

Algorithmic systems have been shown to display bias and cause harm on the basis of factors such as gender, race and class. Favouring groups and demographics that have historically held power amplifies the marginalization of certain communities at scale, negatively impacting their access to rights, privileges, and economic and social opportunities.

PLATFORMS HAVE NO INCENTIVE TO BE HELD ACCOUNTABLE, AND ADVERSARIAL ANALYSIS IS OSTRACISED

Even when these companies choose to provide research insights, they often limit research access or offer inadequate data, effectively concealing the true extent of online harms. For example, platforms like Twitter have imposed barriers to access by making their application programming interface (API) unaffordable for researchers. Additionally, TikTok has been reported to hide hashtags and other potentially harmful content data. Not only do platform companies have significant lobbying power, they also use legal retaliation and obfuscation to block adversarial audits, jeopardising scrutiny.
